Machine learning vs. LLM-native AI and why it matters for healthcare

The emergence of large language models like chatGPT and Claude has fundamentally changed how value can be created in healthcare using AI. But with this technology comes a new rulebook.

Previous AI approaches were characterized by using datasets to train models for specific use cases and then deploying them within existing workflows. With LLM-native approaches, the focus is on stitching together sequential LLM actions, with minimal need for the initial datasets.

What once required months of data collection, feature engineering and model training can now be achieved in an afternoon with a well orchestrated sequence of prompts.

But with new LLM capabilities comes new challenges. There’s a right way to build with LLMs and a wrong way. The paradigms for leveraging traditional AI approaches don’t work in our new LLM era. The future belongs to those that leverage the LLM-native approach.

So how did we get here, what’s an LLM-native approach, and what should we do about it?

We're in the era of LLMs

We can consider AI development in three principal phases.

Phase 1: Classic Machine-Learning

The first phase was what's often described as classic ML and encompasses AI algorithms developed up until 2012. This era preceded the first deep neural network being successfully applied to win the ImageNet competition in September 2012 (AlexNet). It was characterized by manual feature engineering, where human experts had to carefully design and select which data features the model would use, and training models with relatively smaller, structured datasets. Popular algorithms included decision trees, support vector machines (SVMs), random forests and linear regression models.

Phase 2: Deep Learning

This phase was defined by the use of deep neural networks, which are models containing connected "neurons" (inspired by the human brain). Their strength was the ability to automatically learn what features to care about without needing manual feature engineering, provided a sufficiently large dataset. Specialized networks were developed, like convolutional neural networks (CNNs) for computer vision and recurrent neural networks (RNNs) and derivatives for handling sequential data like text. The main limitation was that each model still performed just one task. You'd train one model to classify X-rays, another to extract entities from clinical notes, and yet another to predict patient outcomes, with each task requiring its own dataset, training pipeline, and maintenance overhead.

Phase 3: Large Language Models

The third phase, from late 2022 until now, centers large language models (LLMs) and foundation models. These models represent a paradigm shift: instead of training separate models for each task, a single pre-trained model can handle multiple tasks through natural language instructions alone. LLMs like GPT-5, Claude, and Gemini are pre-trained by researchers and engineers on vast amounts of text data, developing broad capabilities that can be directed toward specific applications through prompting and fine-tuning. In healthcare, this means the same model can summarize clinical notes, answer patient questions, extract structured data from unstructured text, and assist with diagnostic reasoning, without any specific retraining required.

So what does this mean?

LLMs work out-the-box and generalize across tasks

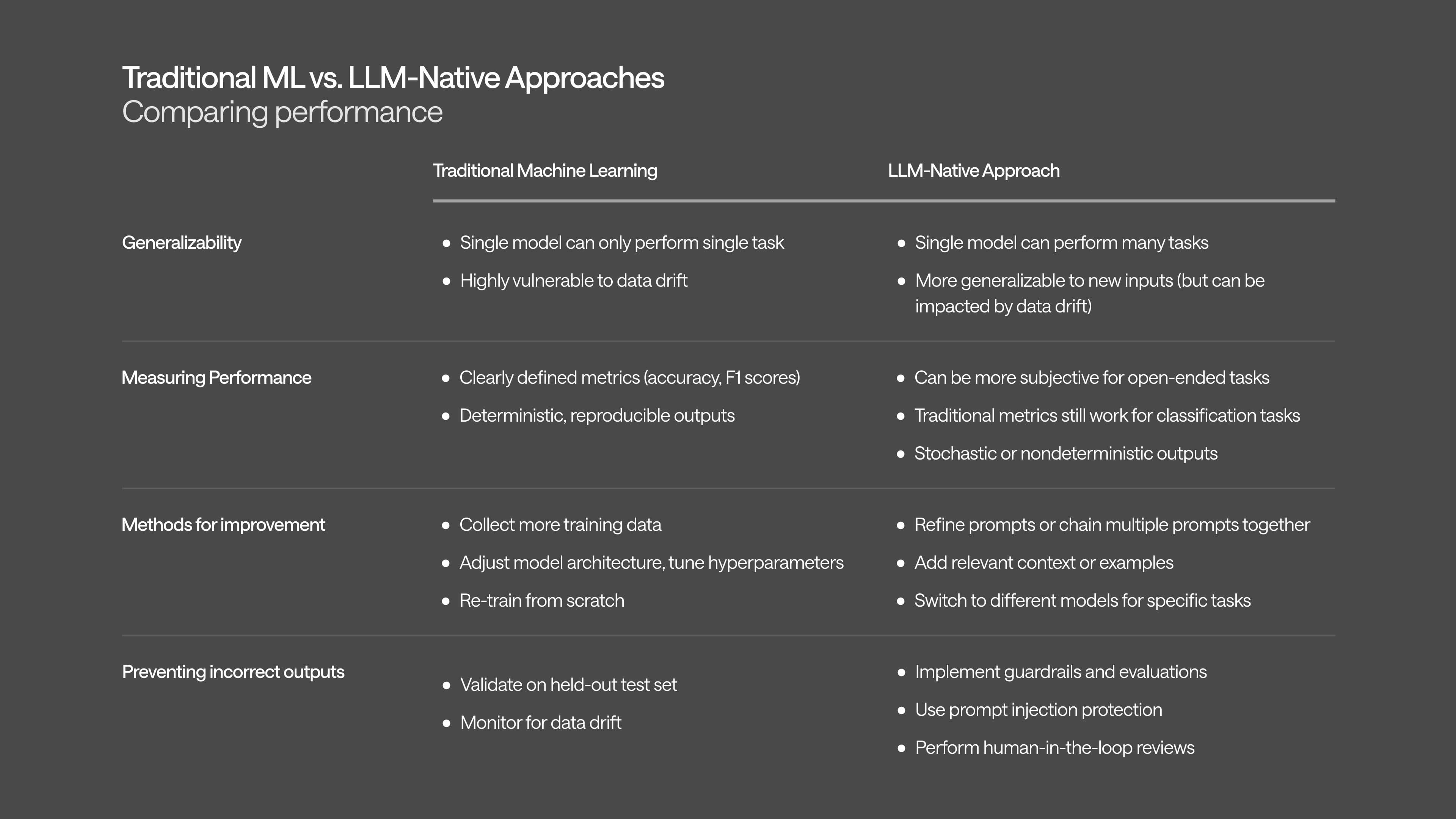

Leveraging LLMs represents a new paradigm, with major advantages over traditional deep learning approaches accompanied by some new challenges.

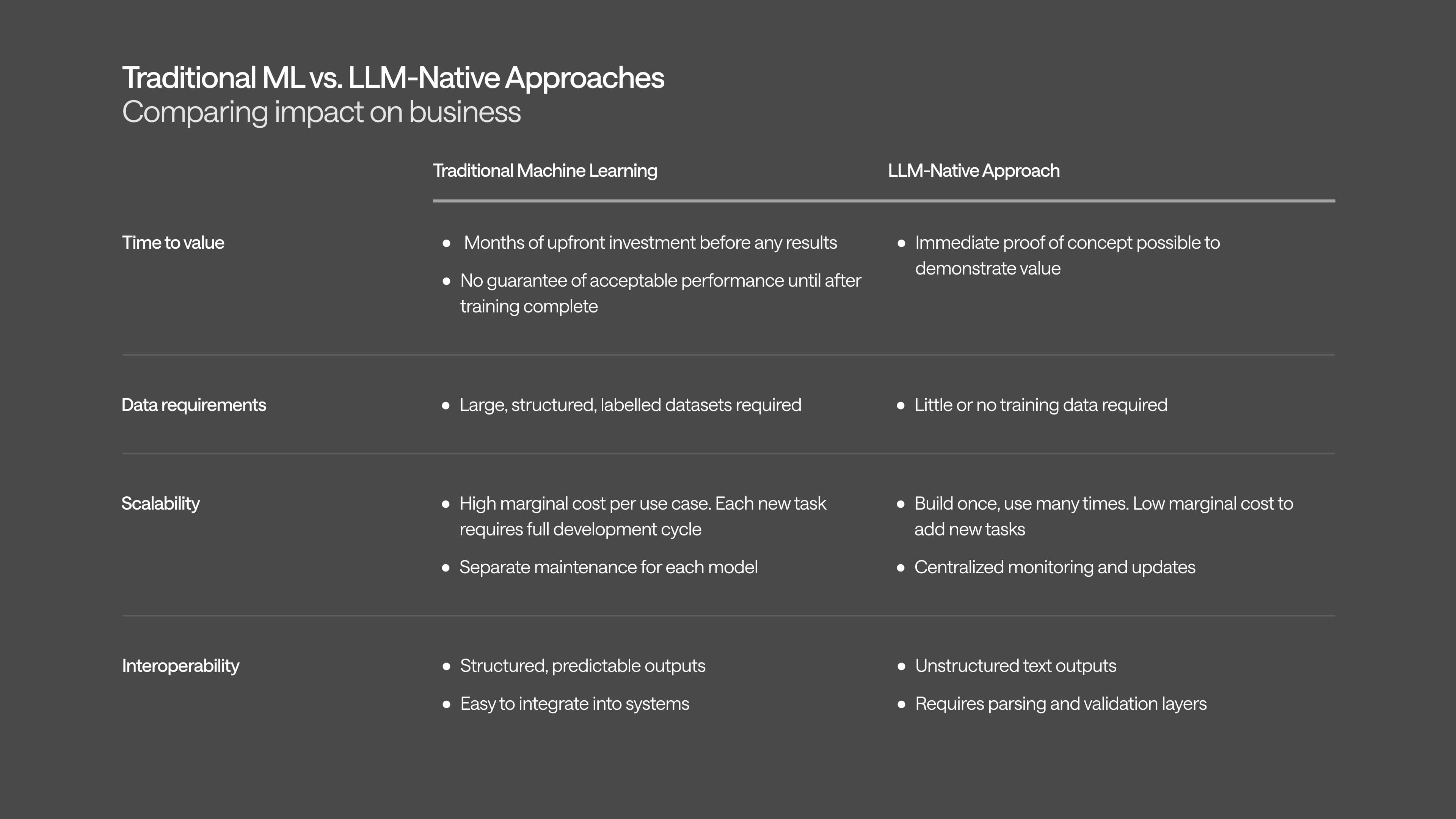

The first unlock comes from the fact that LLMs exhibit strong performance for most tasks without any additional training required. Out-of-the-box LLMs have been trained to be able to generalize to new tasks, so the remaining work is to build the software around them that passes them the information required and prompts the models in the appropriate way. Doing so requires a significantly smaller investment of time and resources.

The value unlock is extended by the ability of the LLMs to generalize. Unlike with traditional deep learning, the same model can be used for multiple tasks. So once the LLM infrastructure is set up, the marginal cost of adding a new use is dramatically lower.

This is supported by the fact that LLMs are much more flexible in the input data that they can handle. With traditional deep learning, when a model has been trained for its particular task, it requires data in the same format as its training data in order to work. LLMs, in comparison, can handle a wide range of data in an unstructured format, provided it fits into their context windows.

This greater flexibility and generalizability comes with its own challenges, however. Although increasingly less common, LLMs can hallucinate information that doesn’t exist. Their behavior is less predictable because they’re stochastic or nondeterministic, so the same input may not necessarily give the same output. Their outputs are unstructured text which can represent a challenge for interoperability (especially important in healthcare). There are additional attack vectors for malicious actors, such as prompt injection (where malicious instructions are inserted into a prompt), sensitive data leakage, and model poisoning. Together, these challenges mean that successful deployment of AI requires building an LLM-native platform.

Building an LLM-native platform requires a new approach

An LLM-native approach to building AI products is characterized by:

- Breaking down real-world workflows into atomic tasks with defined inputs and outputs. Each atomic task may then be performed by a single LLM input/output pair through "prompt chaining’" AI expertise helps to design the appropriate interfaces between tasks. This enables you to optimize performance for each individual task (which we’ve written about more here: Decomposing Real-World Payer Workflows into Agent-Ready, Evaluable Tasks)

- Leveraging a constellation of LLMs. Different LLMs have different strengths and weaknesses. To achieve the best performance, you want to match the right LLM to the right task.

- Making data-driven decisions through evaluations. Evaluations consist of running LLMs against test data points and comparing the quality of the outputs. At scale, this evaluation data is critical for decision-making, such as which model to use, which prompt to use and which workflow to use for each specific use case.

- A mechanism for incorporating real-world behavior and/or understanding into the AI system. AI systems are only as good as their understanding of the context in which they operate and the way things are normally done. Techniques include asking domain experts to write domain knowledge for context augmentation or performing human reviews of AI outputs to generate examples for in-context learning.

Going forward

We’re in a new era of AI. To win, you need to understand the new rules. This doesn’t mean abandoning the foundations of traditional deep learning or classical machine learning entirely—those methods still play a critical role in building robust, interpretable systems. The real opportunity lies in combining these approaches with LLMs to create hybrid architectures that are not only powerful but also context-aware.

As organizations move toward deploying LLMs at scale, success will increasingly hinge on adopting vertical-specific approaches—models and workflows designed around the nuances of a given industry, from healthcare and finance to logistics and education. These tailored systems can leverage domain-specific data, ontologies, and language patterns to deliver greater accuracy and trustworthiness. They also offer a natural path to mitigating risk. For instance, synthetic data generation can be used to rigorously test model behavior in sensitive or rare edge cases without exposing real-world data (especially important when considering data privacy concerns). Controlled fine-tuning pipelines and domain-aligned evaluation frameworks can further help detect bias, ensure regulatory compliance, and strengthen safety controls.

The next phase of AI innovation won’t be defined by who can access the biggest model — but by who can shape these models most effectively for their vertical, balancing innovation with resilience, precision, and accountability.