Your AI brain is useless… unless you also build a nervous system: healthcare-grade AI infrastructure.

In biology, the nervous system is what makes intelligence usable. It transmits signals, orchestrates action, and keeps organisms agile in dynamic environments. Without it, even the most advanced brain is paralyzed—unable to respond, adapt, or scale.

Healthcare needs a clinical AI brain, but it also needs a nervous system: AI infrastructure that is responsive, precise, and resilient under pressure.

When the nervous system overloads, everything stops

Consider the upcoming federal Electronic Prior Authorization (ePA) mandate: by 2027, payers will be required to exchange clinical data and authorization decisions via standardized APIs. That shift will make AI deployment inevitable.

Instead of phone calls and PDFs, prior auth will flow through digital rails: structured FHIR payloads, A2A connections, and yes, still some faxes. But as volume explodes, so will the operational pressure to automate. Millions of cases per day will become candidates for AI decision support.

And these aren’t narrow, templated requests. They involve fragmented records from provider notes, medication histories, and imaging reports. Every case must be evaluated for medical necessity with traceable logic, low false positives, and rapid turnaround. Infrastructure is what makes that possible—or impossible.

If the infrastructure (“nervous system”) can’t keep up, the consequences will cascade across the healthcare system. Patients may wait days or weeks for critical treatments as prior auth decisions stall in overloaded queues. Clinicians will spend more time on phone calls and appeals than at the bedside, deepening burnout and worsening staffing shortages. Compliance risks will emerge as payers miss federally mandated response times, inviting audits, fines, and reputational damage.

Put simply: the nervous system we need isn’t one-size-fits-all middleware. It’s a healthcare-native AI backbone—purpose-built, clinician-aware, and operationally grounded.

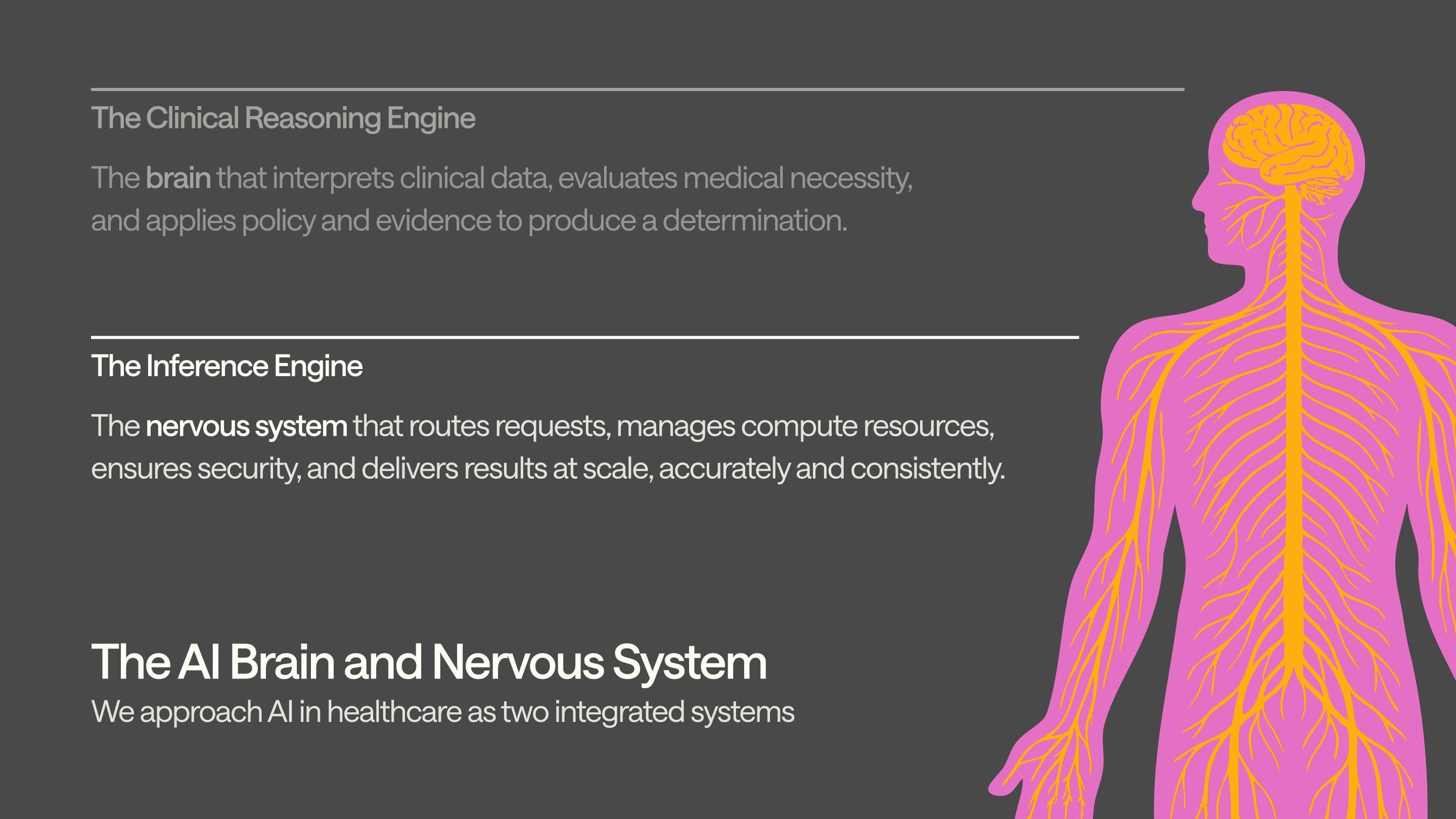

We’ve written separately about how we’re building a clinical AI brain. Here, let’s focus on what often gets overlooked: the nervous system that makes the brain usable.

General-purpose "horizontal" systems cannot keep the body moving

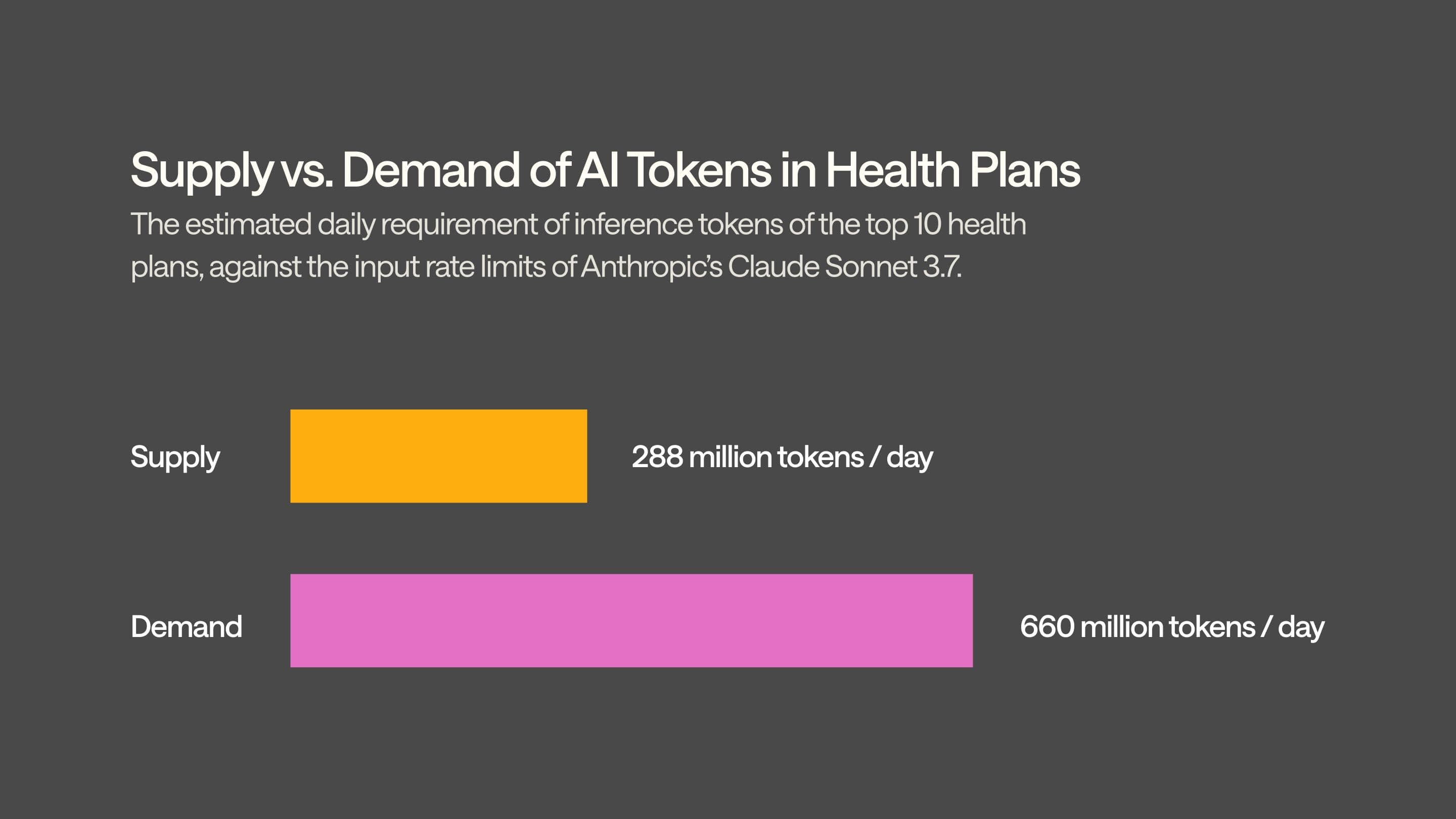

It starts with scale. A single prior authorization case can involve 50,000 words of clinical data, roughly 66,000 tokens. At one million cases per day, that’s 66 billion tokens of processing every 24 hours. Spread across just ten large health plans, each would need 6.6 billion tokens per day. That’s far more than Anthropic currently allows even for its top-tier enterprise customers. And that’s just for prior auth, before you touch appeals, risk adjustment, or member services.
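The scale arithmetic can be checked in a few lines of Python. This is a back-of-envelope sketch that uses only the illustrative figures from the paragraph (50,000 words and about 66,000 tokens per case, one million cases per day, ten plans); the tokens-per-word ratio and the per-second rate are derived from those inputs, not measured.

```python
# Back-of-envelope token volume for prior authorization.
# Inputs are the illustrative figures from the text, not measurements.
WORDS_PER_CASE = 50_000
TOKENS_PER_CASE = 66_000        # implies roughly 1.32 tokens per word
CASES_PER_DAY = 1_000_000
NUM_PLANS = 10
SECONDS_PER_DAY = 86_400

total = CASES_PER_DAY * TOKENS_PER_CASE         # tokens per day, all plans
per_plan = total // NUM_PLANS                   # even split across plans
per_plan_per_sec = per_plan / SECONDS_PER_DAY   # sustained average, ignoring peaks
ratio = TOKENS_PER_CASE / WORDS_PER_CASE

print(f"tokens per word:       {ratio:.2f}")
print(f"tokens/day, all plans: {total:,}")
print(f"tokens/day, per plan:  {per_plan:,}")
print(f"tokens/sec, per plan:  {per_plan_per_sec:,.0f}")
```

Dividing by the seconds in a day gives a sustained rate of roughly 76,000 tokens per second per plan. That is an average; real traffic is unlikely to be flat, so provisioned capacity would need headroom above it.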

Next is reliability. Healthcare decisions can’t tolerate downtime. But general-purpose providers don’t guarantee the uptime, fallback routing, or deterministic behavior needed to support time-sensitive, life-impacting workflows. If the model stalls, patients wait. If it fails, care is delayed. These systems are impressive but weren’t built to operate as core infrastructure in regulated clinical environments.

Then there’s transparency and control. Most hosted AI platforms obscure key performance levers. They don’t expose options like quantization settings, chunked prefill, or speculative decoding strategies, parameters that materially affect speed and, in the case of quantization, accuracy. If a provider tunes these behind the scenes to look fast, you can’t tell whether reasoning quality paid the price, and you can’t fix what you can’t see.

Customization is another wall. General providers restrict fine-tuning and experimentation. You can’t adapt models to payer-specific policies or local documentation norms, or experiment with emerging architectures like state-space models. And you certainly can’t co-develop features with clinicians in the loop. You’re buying a black box: useful for writing emails, but unfit for life-critical decisions.

Finally, there’s compliance. Meeting HIPAA, HITRUST, and data residency requirements is necessary but not sufficient. You also need to be able to trace inferences, audit workflows, and guarantee data isolation.
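Tracing inferences and auditing workflows presuppose a record that cannot be quietly rewritten. One common approach, assumed here for illustration and not a description of Anterior’s implementation, is a hash-chained, append-only log: each entry stores the model, its configuration, a SHA-256 fingerprint of the input, and the output, plus the hash of the previous entry, so altering any past entry breaks verification. The class `InferenceAuditLog` and its methods are hypothetical, and this in-memory sketch leaves out the durable, write-once storage a real deployment would need.

```python
import hashlib
import json


def _digest(payload: dict, prev_hash: str) -> str:
    """SHA-256 over the previous entry's hash plus a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class InferenceAuditLog:
    """Hypothetical append-only log: each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, case_id: str, model: str, config: dict,
               input_text: str, output: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        payload = {
            "case_id": case_id,
            "model": model,
            "config": config,
            # Fingerprint of the clinical input: proves which record was used
            # without duplicating the record itself in the log.
            "input_sha256": hashlib.sha256(input_text.encode("utf-8")).hexdigest(),
            "output": output,
        }
        entry_hash = _digest(payload, prev)
        self.entries.append({"payload": payload, "prev": prev, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited, reordered, or dropped entry fails."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(entry["payload"], prev) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = InferenceAuditLog()
log.record("case-1", "model-a", {"temperature": 0.0}, "example clinical note", "APPROVE")
log.record("case-2", "model-a", {"temperature": 0.0}, "another example note", "DENY")
print(log.verify())                           # True
log.entries[0]["payload"]["output"] = "DENY"  # simulate tampering
print(log.verify())                           # False
```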

General-purpose, horizontal AI wasn’t built for this kind of load, this level of risk, or this kind of precision. Healthcare needs infrastructure that isn’t just smart but purpose-built to carry the weight.

At Anterior, we realized this early. And we knew we couldn’t wait for someone else to solve it.

This is why we built a healthcare-grade AI nervous system

We built our own inference engine: a clinical nervous system designed to carry real decision-making at real-world scale.

Our inference engine doesn’t just call models; it orchestrates them. Every request, whether it’s a prior auth determination or a risk adjustment pass, is routed through a system we control end-to-end. That means we decide which model runs when, how it’s configured, how it fails over, and how the output is normalized. It means we can guarantee determinism where it matters and flexibility where it counts.
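A minimal sketch of that orchestration pattern, assuming a simple priority-ordered failover chain: each `Route` (a hypothetical type) wraps a model backend, timeouts and connection errors fall through to the next route, and free-text output is normalized onto a fixed decision vocabulary, with anything unrecognized pended for human review. For determinations it forces temperature 0 and a fixed seed; on GPUs that improves but does not by itself guarantee bit-for-bit reproducibility, since batching and kernel choices can still perturb results.

```python
from dataclasses import dataclass, field
from typing import Callable

# A backend takes (prompt, generation settings) and returns raw model text.
# In practice it would wrap a model server; here it is any Python callable.
Backend = Callable[[str, dict], str]


@dataclass
class Route:
    """One model in a failover chain. Names and settings are illustrative."""
    name: str
    backend: Backend
    settings: dict = field(default_factory=dict)


def normalize(raw: str) -> str:
    """Map free-text model output onto a fixed decision vocabulary."""
    text = raw.strip().lower()
    if text.startswith("approve"):
        return "APPROVE"
    if text.startswith("deny"):
        return "DENY"
    return "PEND_FOR_REVIEW"  # unrecognized output is routed to a human


def orchestrate(prompt: str, chain: list[Route], deterministic: bool) -> tuple[str, str]:
    """Try each route in priority order; return (route used, normalized decision)."""
    for route in chain:
        settings = dict(route.settings)
        if deterministic:
            # Greedy decoding plus a fixed seed for determinations that may be audited.
            settings.update(temperature=0.0, seed=0)
        try:
            return route.name, normalize(route.backend(prompt, settings))
        except (TimeoutError, ConnectionError) as exc:
            print(f"route {route.name} failed ({exc}); failing over")
    # Every route failed: pend for human review rather than guess.
    return "none", "PEND_FOR_REVIEW"


# Usage with stand-in backends.
def overloaded(prompt: str, settings: dict) -> str:
    raise TimeoutError("primary overloaded")


def healthy(prompt: str, settings: dict) -> str:
    return "Approve: criteria met" if settings.get("temperature") == 0.0 else "unsure"


chain = [Route("primary", overloaded), Route("fallback", healthy)]
print(orchestrate("case 123", chain, deterministic=True))  # ('fallback', 'APPROVE')
```

Returning the route name alongside the decision makes it possible to record which model actually produced each determination.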

We self-host so we can do what others can’t: tune performance down to the token. We run models through frameworks like vLLM, allowing us to control chunked prefill, speculative decoding, quantization strategies, and memory-efficient serving methods. If a configuration improves throughput or reliability for a specific use case, we can test it and roll it out within hours. We’re not tied to another vendor’s roadmap.
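When you control the serving stack, a change like a larger batching budget can be handled as data: a small, reviewable diff against the current profile that is just as easy to roll back. The sketch below assumes per-use-case profiles whose keys are chosen to match vLLM engine arguments (`tensor_parallel_size`, `gpu_memory_utilization`, `enable_chunked_prefill`, `max_num_batched_tokens`, `quantization`); the use-case names, model path, and values are hypothetical placeholders, not tuned settings.

```python
# Serving profiles kept as data, one per use case. Keys are chosen to match
# vLLM engine arguments; the model path, use-case names, and values are
# hypothetical placeholders, not tuned recommendations.
BASE = {
    "model": "path/to/clinical-model",  # hypothetical
    "tensor_parallel_size": 4,
    "gpu_memory_utilization": 0.90,
    "enable_chunked_prefill": True,
    "max_num_batched_tokens": 8192,
}

PROFILES = {
    # Long clinical records: a larger token budget per scheduling step.
    "prior_auth": {**BASE, "max_num_batched_tokens": 16384},
    # Throughput-oriented batch work, assuming FP8 has been validated for it.
    "risk_adjustment_batch": {**BASE, "quantization": "fp8"},
}


def with_experiment(use_case: str, overrides: dict) -> dict:
    """Candidate profile = current profile + overrides; the original is untouched."""
    return {**PROFILES[use_case], **overrides}


def diff(old: dict, new: dict) -> dict:
    """Only the settings that changed: the part a reviewer needs to approve."""
    keys = sorted(set(old) | set(new))
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}


candidate = with_experiment("prior_auth", {"max_num_batched_tokens": 32768})
print(diff(PROFILES["prior_auth"], candidate))  # {'max_num_batched_tokens': (16384, 32768)}
```

With vLLM installed, an approved profile could be passed as keyword arguments, e.g. `LLM(**profile)` from the `vllm` package; argument names have changed across vLLM releases, so check them against the version in use.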

Cost matters, too, so we don’t accept infrastructure overhead as inevitable. We use spot instances for non-urgent jobs, apply GPU sharding and tensor parallelism for large models (to solve for healthcare scale), and squeeze performance from every kernel. The result: enterprise-grade inference at a lower price than public APIs, with better control and uptime.
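The paragraph doesn’t say how jobs are classified as non-urgent. One simple policy, assumed here for illustration, routes by deadline slack: a job goes to cheaper, preemptible spot capacity only if the time left before it is due is several times its expected runtime, and a job interrupted on spot is re-routed with its shrunken deadline. `Job`, `choose_pool`, `on_preempted`, the safety factor of 3, and the example timings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    deadline_hours: float     # time remaining until the response is due
    est_runtime_hours: float  # expected processing time on one allocation


def choose_pool(job: Job, safety_factor: float = 3.0) -> str:
    """Use preemptible spot capacity only when the job has enough slack
    to absorb interruptions and re-runs; otherwise use on-demand."""
    slack = job.deadline_hours - job.est_runtime_hours
    return "spot" if slack >= safety_factor * job.est_runtime_hours else "on_demand"


def on_preempted(job: Job, hours_elapsed: float) -> tuple[Job, str]:
    """After a spot interruption, shrink the deadline and route the job again.
    Assumes the job restarts from scratch (no checkpointing)."""
    remaining = Job(job.job_id, job.deadline_hours - hours_elapsed, job.est_runtime_hours)
    return remaining, choose_pool(remaining)


urgent = Job("pa-001", deadline_hours=1, est_runtime_hours=0.5)
batch = Job("ra-batch-7", deadline_hours=48, est_runtime_hours=6)
print(choose_pool(urgent))         # on_demand
print(choose_pool(batch))          # spot
print(on_preempted(batch, 40)[1])  # on_demand: only 8 hours left for a 6-hour job
```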

But the real difference is how we build. “Horizontal” players pair engineers with product managers. We pair them with our own physicians, so that when something needs tuning, a clinician is in the room and the models learn from the people who practice medicine. Crucially, that loop, from observation to experiment to implementation, can close in a single afternoon.

And we don’t just meet security standards—we surpass them. Our infrastructure is HIPAA- and HITRUST-ready, and our nervous system runs on Zero Trust architecture by design. No one (not even our engineers) can access patient data.

At Anterior, compliance means more than a certificate or a standard. Most vendors stop at certificates, and AI standards in healthcare are still catching up. We’re not waiting around. Staying secure means knowing both healthcare and technology deeply, and we do. That’s why we’re not just checking boxes; we’re setting the bar.

None of this is general-purpose AI with a healthcare wrapper. It’s a healthcare-native AI nervous system, built to carry intelligence safely and scalably through the arteries of American healthcare.

Choose a partner with a brain… and a nervous system

The question for payers is not whether to adopt AI. It’s who to adopt it with.

Building and maintaining a healthcare-grade inference engine internally is not just expensive—it’s deeply distracting. It pulls resources from core data infrastructure, demands rare engineering talent, and requires constant tuning to remain compliant, performant, and explainable.

But horizontal AI platforms also weren’t designed for the realities of healthcare. They don’t natively understand structured medical policy. They weren’t built to support justification logs or to ensure that determinations are reproducible when challenged.

Our customers know they need a healthcare-native AI partner. That means a team that has built both the clinical reasoning and the infrastructure required for high-stakes scale, and that has sat inside payer operations and knows what it feels like when a queue stalls, a provider calls, and a member is caught in the middle.

In healthcare, infrastructure issues won’t just break SLAs; they will disrupt care.